YmeetMe just reached 1 million users last month, after 2 years since our official launch. As the number of users grow, the size of the database also quickly increases.

The most notable one is LIKE table. We store LIKE from a user to another user in MySQL table. It grows by 500,000 records per day and now we have more than 70 million records in a table. Now it does not fit into our RAM which puts us a huge performance penalty.

There are several ways to cope with such situation. Using appropriate NoSQL database can be one of them. However, as LIKE table is one of our core table, historically we have queries that joins LIKE tables. So moving LIKE table to other storage means rewriting all queries which is so straightforward.

So in our case we decided to use Innodb Table Compression.

What is Table Compression?

Simply put, it compress the table using LZ77 algorithm. So obviously we need less disk size. It means we will need less disk I/O (typically half). Moreover InnoDB buffer pool will keep the compressed data instead of expanded data. So we can place more data into buffer pool cache. It will significantly reduce the chance of disk read.

On the other hand, more CPU computation for encode/decode will be required. So if you have enough RAM, compressing data will just put you some computation overhead without reducing disk IO. But if your data does not fit into RAM, the overhead is negligible compared to time taken for the disk read. Generally speaking, scaling CPU is easier than scaling RAM or disk IO performance. So it can be beneficial if your data is big.

Table Compression works the best when you only issue select query to the table. The overhead of UPDATE is a bit bigger than SELECT or INSERT as it requires reorganization of compressed data.

How to enable compression?

It is pretty easy to do it. You can just run ALTER TABLE statement.

ALTER TABLE `table` ROW_FORMAT=COMPRESSED KEY_BLOCK_SIZE=8;

Then it will create a compressed data file for the table. The time takes totally depends on the disk space consumed by the table. Fortunately this operation does not lock the table so you don’t need to worry about the downtime even if your table is huge.

You can see how data size is reduced as follows.

Before compression

mysql > select table_name,engine,avg_row_length,data_length from information_schema.tables where table_name = 'likes'; +------------+--------+----------------+-------------+ | table_name | engine | avg_row_length | data_length | +------------+--------+----------------+-------------+ | likes | InnoDB | 78 | 5304745984 | +------------+--------+----------------+-------------+ 1 row in set (0.00 sec)

After compression

mysql> select table_name,engine,avg_row_length,data_length from information_schema.tables where table_name = 'likes'; +------------+--------+----------------+-------------+ | table_name | engine | avg_row_length | data_length | +------------+--------+----------------+-------------+ | likes | InnoDB | 41 | 2853175296 | +------------+--------+----------------+-------------+ 1 row in set (0.00 sec)

As you can see, the average row length has been reduced to nearly half.

KEY_BLOCK_SIZE?

But what is KEY_BLOCK_SIZE in the ALTER TABLE statement? This is what you need to understand when you enable compression.

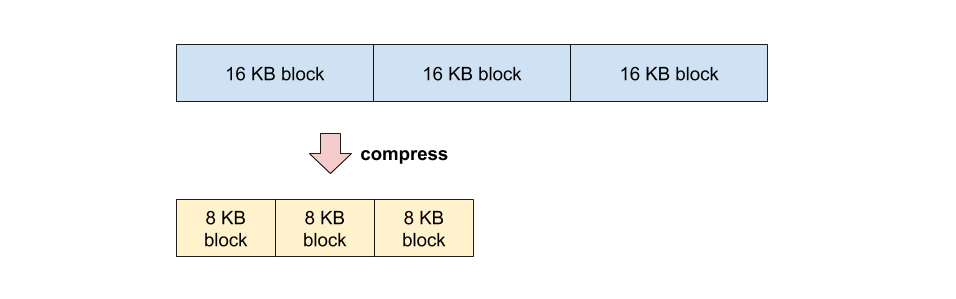

MySQL always read/write disk data by Page. A page is a block of disk space with 16 KB size by default. So if your query requires a bit of data on the disk, MySQL always read a 16 KB block that contains the data you want. Because this concept of block is a fundamental component of MySQL query algorithm, it must keep it after compression is enabled. It means MySQL will compress data by block. And all the compressed blocks of a table must have the same size on disk. The block size after compression is KEY_BLOCK_SIZE.

So it means even if a 16 KB block can be compressed to 1 KB, it still consumes 8 KB block size after compression. I think it is a reasonable technical tradeoff that keeps the architecture simple.

So if you are very sure that all blocks can be compressed to less than 4 KB, you can set KEY_BLOCK_SIZE=4. And setting KEY_BLOCK_SIZE=16 almost means nothing if you use the default block size of 16 KB.

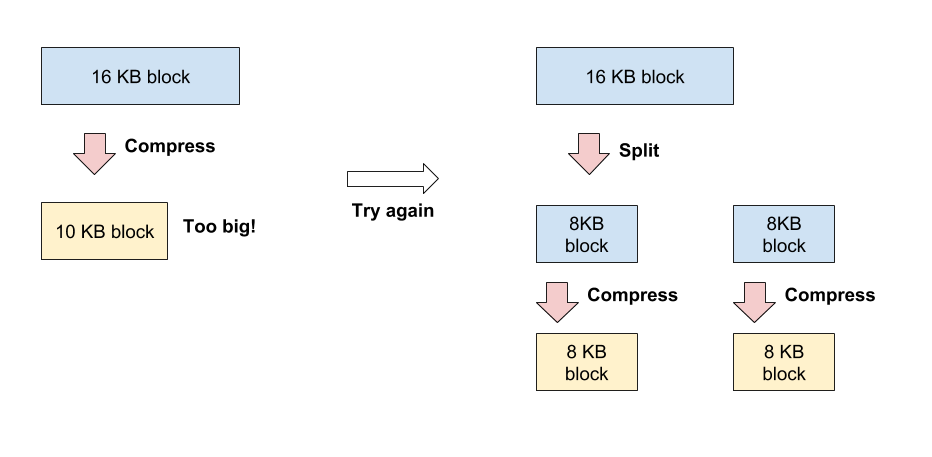

But what happens if the compressed block size was bigger than the KEY_BLOCK_SIZE? This is where a bit of trick happens. When we enable compression, MySQL tries to compress a whole block. But then if it finds that after compression the block size is bigger than KEY_BLOCK_SIZE, then MySQL cancel it and then split the original block into two half size block and then compress the each half. In this case it fails to reduce the disk size. This process is called relocation.

So when you compress a table, you must find the appropriate KEY_BLOCK_SIZE that is as small as possible but does not cause too many relocation. Generally you can try with 8 KB first. If large part of your table space is occupied by data of redundant format like XML or JSON, you can try with 4 KB.

You can find the best KEY_BLOCK_SIZE by experiments. After you run compression (I recommend to do it on your test environment first), you can see how many times relocation occured.

mysql> select * from information_schema.INNODB_CMP; +-----------+--------------+-----------------+---------------+----------------+-----------------+ | page_size | compress_ops | compress_ops_ok | compress_time | uncompress_ops | uncompress_time | +-----------+--------------+-----------------+---------------+----------------+-----------------+ | 1024 | 0 | 0 | 0 | 0 | 0 | | 2048 | 0 | 0 | 0 | 0 | 0 | | 4096 | 0 | 0 | 0 | 0 | 0 | | 8192 | 5587233 | 5495587 | 2641 | 2147483647 | 699380 | | 16384 | 0 | 0 | 0 | 0 | 0 | +-----------+--------------+-----------------+---------------+----------------+-----------------+ 5 rows in set (0.00 sec)

compress_ops means the number of compression and compress_ops_ok means the number of successful compression without relocation. In this case, our success rate is 98.4% so it is very good.

When to use Table Compression?

As a rule, you can use Table Compression when

- Your data size is too big to fit into RAM

- Your data contains somehow repeated data

When you should not use compression

- When your data size is small

- When you frequently UPDATE

- You store random data or blob like pictures

- CPU usage of MySQL server is already very high

Instantly create an app for your website with the click of buttons! Appy Pie, is an unrivalled leader in the mobile app bandwagon that allows everyone to transform their app ideas into reality, without any technical knowledge.

Simply drag and drop the features and create an advanced Android or iOS application for mobiles and smartphones, as easy as a pie.

Start creating your app on the fly & even convert your website into an app with Appy Pie.

http://www.tkqlhce.com/click-8095449-11578080